Multiverse 2.357


In our previous posts we discussed using physical identifiers, including faces and other physical characteristics like eyes, fingerprints and veins, for authentication and identification. But how does that help providers of online services know who is using the service? The answer might involve behavioral biometrics.

Behavioral biometrics refers to specific behaviors that are tied to individual identities. We tend to exhibit repeating patterns of movement, whether we realize it or not, and react to certain situations in specific, measurable and predictable ways.

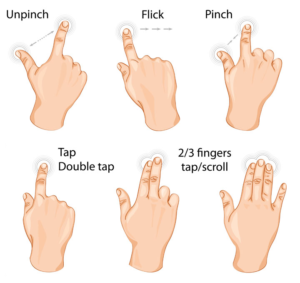

You swipe, scroll, tap, hold your phone, type, navigate between form fields, press and hold, click on tiny links, etc. in a certain way. Which fingers do you use, and at what angle is the phone when you do specific things? Taken collectively, these habits can be thought of as a distinct pattern that represents you. This is the essence of behavioral biometrics.

The strongest indicators of all are those that the user is unaware of. How does the user react when a swipe doesn’t seem to complete, or the display briefly freezes? The fingers or the phone might move every time these things happen, and different users may react in different ways.

For example, how exactly do you swipe when an annoying ad pops up? How many times do you tap on a button on your phone screen if an annoying popup won’t go away? Do you shake your phone when annoyed by a popup ad, or move it up rapidly to meet your finger? How do you hold your phone normally, in stressful conditions, and when you are prompted for action? Sensors in smartphones can distinguish minor movement details like these, and companies have begun to use this data to identify you by your behavior.

Perhaps a user once held a fingertip on something, waiting for a menu to pop up, and it did not work right away. That user may now be trained to hold it down longer the next time. There are so many subtle behaviors that can be measured. The trick is to find those measurable actions that vary from user to user but are consistent for a given user.
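To make that "trick" a little more concrete, here is a minimal sketch in Python of one way to rank candidate behaviors: compare how much a measurement differs between users with how much it wobbles for the same user. The function name `discriminability`, the two example behaviors and all of the numbers are invented for illustration. Nothing here is taken from any vendor’s product.

```python
from statistics import mean, pvariance

def discriminability(samples_by_user):
    """Ratio of between-user variance to average within-user variance.

    samples_by_user maps a user id to a list of measurements of one
    behavior. A higher score suggests the behavior differs between
    users while staying stable for each individual user.
    """
    user_means = [mean(values) for values in samples_by_user.values()]
    between = pvariance(user_means)
    within = mean(pvariance(values) for values in samples_by_user.values())
    if within == 0:
        return float("inf") if between > 0 else 0.0
    return between / within

# Hypothetical measurements, made up for this example.
long_press_ms = {
    "alice": [510, 495, 505],
    "bob": [820, 790, 805],
    "carol": [300, 310, 290],
}
taps_on_stuck_button = {
    "alice": [1, 4, 2],
    "bob": [3, 1, 2],
    "carol": [2, 4, 1],
}

print(discriminability(long_press_ms))
print(discriminability(taps_on_stuck_button))
```

With this made-up data, long-press duration scores far higher than tap count, because each user’s long presses cluster tightly around a personal value while tap counts overlap heavily. A real system would presumably look at many more signals and far more data.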

The same sort of techniques can be used for desktop users as well. How does a user tend to move their mouse around when reading, or navigating, or looking for a button to press? How does the user move the mouse when a page appears stuck or the mouse cursor disappears from the screen? Does the user’s typing speed or mouse movement speed change under certain conditions?
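As a rough illustration of how a question like "does mouse movement speed change?" could be turned into a number, the Python sketch below computes average pointer speed from timestamped cursor positions. The `(seconds, x, y)` sample format and the function name `mean_pointer_speed` are assumptions made for this example, not a description of how any particular product records mouse data.

```python
from math import hypot

def mean_pointer_speed(samples):
    """Average pointer speed in pixels per second.

    samples is a chronological list of (t_seconds, x, y) tuples.
    Returns 0.0 when there is not enough data to measure speed.
    """
    if len(samples) < 2:
        return 0.0
    distance = 0.0
    for (_, x0, y0), (_, x1, y1) in zip(samples, samples[1:]):
        distance += hypot(x1 - x0, y1 - y0)
    elapsed = samples[-1][0] - samples[0][0]
    return distance / elapsed if elapsed > 0 else 0.0

# Hypothetical traces: calm reading versus hunting for a lost cursor.
reading = [(0.0, 0, 0), (1.0, 3, 4), (2.0, 6, 8)]
searching = [(0.0, 0, 0), (0.5, 300, 400), (1.0, 0, 0)]

print(mean_pointer_speed(reading))
print(mean_pointer_speed(searching))
```

Comparing the same user’s speed across situations, such as normal reading versus a page that appears stuck, is the kind of contrast the questions above are getting at.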

This technology might be used to protect people, as is so often the case for new technologies. These techniques can be used, for example, to ensure that an authenticated user who has been granted access to some sensitive resource is still the same user who currently operates the device or interacts with the website.

Banks Are Interested

A bank might be interested in behaviors like how long it takes you to bring focus to and fill out form fields for security questions. A person who knows data points like mother’s maiden name might navigate into the form field and begin typing right away, while an unauthorized person may take a few moments to look up the answer. Perhaps this is a better indicator than actually knowing the answer!
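The hesitation signal described above is simple enough to sketch. The Python below measures the gap between a form field gaining focus and the first key press in it. The `FieldEvent` record, the event names and the three-second threshold are all hypothetical choices for this example. A real deployment would presumably compare against each customer’s own history rather than a fixed cutoff.

```python
from dataclasses import dataclass

@dataclass
class FieldEvent:
    kind: str    # "focus" or "keydown"
    field: str   # name of the form field
    t_ms: float  # timestamp in milliseconds

def first_keystroke_latency(events, field):
    """Milliseconds from the field's first focus to the first key press.

    events must be in chronological order. Returns None if the field
    was never focused or nothing was typed after it gained focus.
    """
    focus_t = None
    for event in events:
        if event.field != field:
            continue
        if event.kind == "focus" and focus_t is None:
            focus_t = event.t_ms
        elif event.kind == "keydown" and focus_t is not None:
            return event.t_ms - focus_t
    return None

def looks_hesitant(latency_ms, threshold_ms=3000.0):
    """Flag a long pause. The default threshold is an arbitrary guess."""
    return latency_ms is not None and latency_ms > threshold_ms

# Hypothetical sessions on a "maiden_name" security question.
owner = [FieldEvent("focus", "maiden_name", 1000.0),
         FieldEvent("keydown", "maiden_name", 1400.0)]
stranger = [FieldEvent("focus", "maiden_name", 1000.0),
            FieldEvent("keydown", "maiden_name", 9500.0)]

print(first_keystroke_latency(owner, "maiden_name"))
print(looks_hesitant(first_keystroke_latency(stranger, "maiden_name")))
```

A long pause alone proves nothing, of course. It would only be one input among many.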

The Royal Bank of Scotland was an early adopter of this technology, starting down this path in 2016 for their high net worth customers. Since then they have continued to embrace behavioral biometrics, now using it for all their business and retail customers. Other banks like NatWest are doing the same.

They use software from BioCatch to monitor more than 2,000 movements on the keyboard, mouse, or mobile device. This use case is compelling, and there are currently many new entrants into a space that already has some formidable competitors, like IBM. BioCatch uses “Invisible Challenges”, a collection of slightly unusual events for which they can measure the subtle reactions of the user.

Banks are likely to continue this adoption trend, because traditional ways of securing customer accounts need reinforcement. For every cybersecurity solution, there are clever thieves who will find ways of defeating it. PINs and passwords have been the staples of authentication for years. Verifying device identifiers and customer location, and even biometric authentication, have been mainstays of the banking industry, but the one thing that does not seem possible to fake is behavior itself.

Banks are not the only entities interested in behavioral biometrics, of course. More e-commerce companies are expected to use this technology in the coming years, along with a variety of high security applications. There is plenty of interest in military application areas as well, for example in ensuring that an approved person is operating a weapon system.

What Are the Privacy Implications?

Nefarious uses are going to crop up as well; this is not just a tool to combat fraud and unauthorized access. Suppose an insurance company is interested in tracking your hand-eye coordination. Does an increase in hand shaking or other diminishment in motor skills over time indicate increased likelihood of a serious medical condition?

There are surely many gray areas, and this behavioral data collection and analysis is completely unregulated at this time. Some large retailers are thought to be using this information about your typing and tapping behavior to infer things about your emotional state, and thus your likelihood to buy or be influenced by aggressive or sensitive advertising.

At the extreme end of this rabbit hole there are dystopian scenarios that even Orwell would surely find disturbing. It seems perfectly reasonable to assume that insurance underwriters would be interested in having additional insights into behavioral patterns of drivers, and personal behavior patterns in our smart homes. We might imagine a day when smart cities use clues based on occupants’ behavior to make educated guesses about possible undesirable behavior before it occurs.

So the next time you see something unusual on a retailer’s login page, or a bank web page asking you your security questions, be aware that your mouse or finger movements might be under close scrutiny as an unofficial second type of authentication. Some high profile sites have fraud rates on their login pages that are staggering, and this is a technique for them to combat the fraudulent activity without raising the suspicions of would-be attackers.

It is nevertheless troubling from a privacy perspective, since very personal inferences can be made. There is zero disclosure as far as this author can tell, and there are no known regulations regarding the collection, sharing, deletion or storage of such data. You have no right to access your profile data in this case, and no ability to even know this data is being collected.

Companies show signs of wanting to capture massive amounts of behavioral data, presumably since they can, and look for divergences in the patterns. These “continuous authentication” techniques are areas of current research. We should probably expect companies to gather as much information on a continuous basis as they can. Ideally technologies would enable us to choose what to share and with whom.
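To give a feel for what "looking for divergences in the patterns" could mean in practice, here is a minimal Python sketch that scores a live session against a stored profile using z-scores. The feature names, the numbers and the functions `build_profile` and `divergence` are all invented for this example. Real continuous authentication systems are presumably far more sophisticated.

```python
from statistics import mean, stdev

def build_profile(history):
    """Map each feature name to (mean, standard deviation) of past values."""
    return {name: (mean(vals), stdev(vals)) for name, vals in history.items()}

def divergence(profile, session):
    """Average absolute z-score of the session's features.

    Values near 0 mean the session resembles the profile. Features that
    are missing from the profile or have no spread are skipped.
    """
    scores = []
    for name, value in session.items():
        if name not in profile:
            continue
        mu, sigma = profile[name]
        if sigma == 0:
            continue
        scores.append(abs(value - mu) / sigma)
    return mean(scores) if scores else 0.0

# Hypothetical history for one user, made up for this example.
history = {
    "typing_chars_per_s": [5.0, 5.2, 4.8, 5.1, 4.9],
    "mouse_px_per_s": [400, 420, 380, 410, 390],
}
profile = build_profile(history)

same_user = {"typing_chars_per_s": 5.1, "mouse_px_per_s": 405}
someone_else = {"typing_chars_per_s": 2.5, "mouse_px_per_s": 700}

print(divergence(profile, same_user))
print(divergence(profile, someone_else))
```

Re-running a score like this every few seconds is one simple way to picture "continuous" checking. Where to draw the line between normal variation and a different person is the hard part, and this sketch does not attempt it.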

These systems work because devices have so many sensors to gather data. As devices continue to incorporate more and different types of sensors, we should expect to face increasing challenges around preventing excessive data collection by those who would abuse this capability.

For example, these systems may prove useful in combatting the insider threat faced by companies by noticing subtle changes in employee behavior. They might also generate false positives, triggered by unrelated employee anxiety. This raises troubling questions about surveillance in the workplace as well.